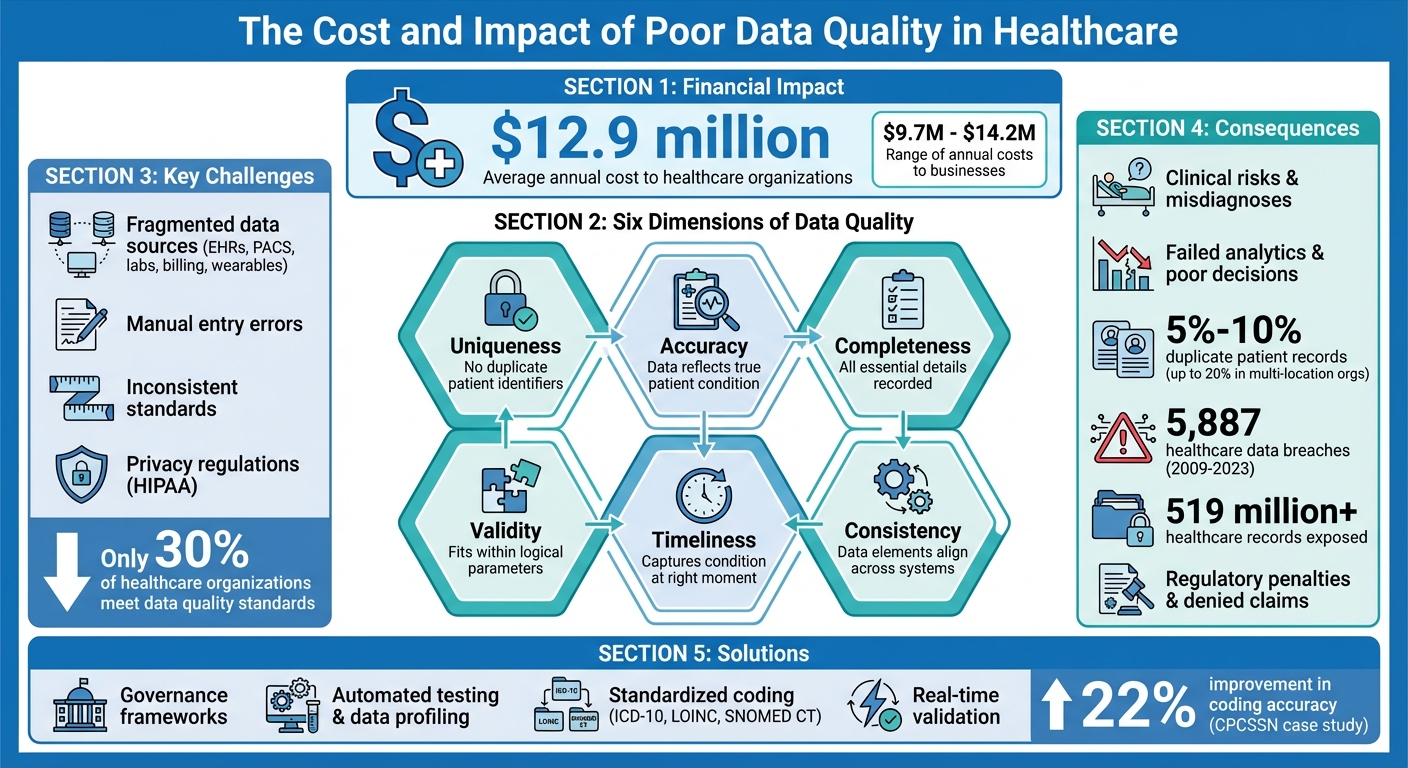

Data quality is the backbone of healthcare analytics. Without accurate, complete, and timely data, insights from predictive, prescriptive, and descriptive analytics can lead to poor decisions, jeopardizing patient safety and operational efficiency. In healthcare, poor data quality costs organizations an average of $12.9 million annually and poses risks like misdiagnoses, regulatory penalties, and financial losses.

Key takeaways:

- Six dimensions of data quality: Accuracy, completeness, consistency, timeliness, validity, and uniqueness.

- Challenges: Fragmented data sources, manual entry errors, inconsistent standards, and privacy regulations.

- Consequences: Clinical risks, failed analytics, financial losses, and compliance issues.

- Solutions: Governance frameworks, technical tools (like automated testing and data profiling), and standardized coding systems.

Improving data quality requires embedding quality controls at every stage - from data entry to analytics. This ensures reliable insights for better patient care and operational success.

The Cost and Impact of Poor Data Quality in Healthcare

What's Data Quality Got to Do With It?

Challenges in Maintaining Data Quality for Healthcare Analytics

Healthcare organizations face a mix of technical, operational, and regulatory hurdles that make ensuring data reliability a tough task. These issues range from system limitations to compliance requirements, often clashing with one another. Understanding these obstacles is crucial for creating analytics platforms that can consistently deliver reliable insights. From data entry to integration, every stage of the process is affected.

6 Dimensions of Data Quality

One of the core challenges lies in preserving integrity across six critical dimensions of data quality.

- Accuracy: Ensures the data truthfully reflects the patient’s condition.

- Completeness: Requires all essential details to be recorded, such as both systolic and diastolic blood pressure readings.

- Consistency: Makes sure data elements align - for example, a diabetes diagnosis should be paired with a metformin prescription.

- Timeliness: Focuses on whether the data captures the patient’s condition at the right moment, which is especially vital for real-time decisions and emergencies.

- Validity: Checks if the data logically fits within known parameters.

- Uniqueness: Prevents duplicate patient identifiers across systems.

Each of these dimensions is essential to healthcare operations. As Ramya Avula from Carelon Research aptly puts it:

"The performance of predictive analytics in healthcare is fundamentally dependent on the quality of the data ingested by predictive models".

When even one of these dimensions falters, the entire analytics process - whether it’s clinical decision-making or managing population health - faces setbacks.

Fragmented Data Sources and Poor Interoperability

Healthcare data is scattered across various systems, including electronic health records (EHRs), picture archiving and communication systems (PACS), laboratory systems, billing platforms, and even wearable devices. This fragmentation creates significant challenges because each system operates with its own formats and terminologies.

The lack of standardization compounds the issue, as data from different institutions often cannot be seamlessly integrated. For instance, one hospital may record weight in pounds, while another uses kilograms. Even common metrics like smoking status are often recorded differently.

A practical example of tackling this issue comes from the Canadian Primary Care Sentinel Surveillance Network (CPCSSN). Between May and August 2013, they worked with 61 healthcare providers in Ontario to implement a Data Presentation Tool. By standardizing terminology - like using kilograms exclusively for weight and unified terms for smoking status - they improved the coding accuracy for five chronic conditions by 22%.

Data Entry and Integration Errors

Manual data entry remains a major source of errors. Under time pressure, clinicians and administrative staff may make typos, choose incorrect codes, or leave required fields blank. Inconsistent units, mismatched date formats, and duplicate patient identifiers further muddy the waters, making reliable analysis difficult.

These technical issues often lead to real-world consequences. Taylor Larsen from Health Catalyst highlights how structural problems - like missing values in critical fields or non-unique encounter identifiers - can prevent data from being linked across different areas. For example, when lab results and medication records aren’t properly connected, clinicians are forced to make decisions based on incomplete information.

Governance and Privacy Constraints

Regulations like HIPAA add another layer of complexity to managing data quality. While these frameworks emphasize maintaining data integrity, they can also create barriers to accessing and validating information. Many organizations adopt overly cautious data management practices, prioritizing restrictions over usability, which makes it harder for authorized users to identify and fix errors.

Without clear data ownership or formal strategies, only 30% of healthcare organizations meet data quality standards. This often results in silos and conflicting definitions of metrics. As one Health Policy Analyst noted:

"The way that you collect that data, and also define what data actually means is so important. You know, a piece of data as far as what it means to us doesn't necessarily mean the same thing to the provider".

This disconnect fosters silos where departments define and measure the same metrics differently. For instance, the Emergency Department might count "patients treated" one way, while the Billing Department uses another definition, leading to inconsistent analytics and mistrust. When governance is decentralized and validation rules are weak, these inconsistencies ripple across the organization, undermining confidence in the data at every level. Without a streamlined governance framework, every step of data analysis becomes compromised.

How Poor Data Quality Affects Healthcare Outcomes

Poor data quality disrupts clinical care, drains financial resources, and exposes organizations to regulatory risks. Issues like incomplete, inaccurate, or outdated information directly contribute to these challenges.

Clinical Risks and Patient Safety

Inaccurate or incomplete data can jeopardize patient safety. Errors of omission - when critical details are missing - and errors of commission - when wrong information leads to poor decisions - can have serious consequences. For instance, failing to record a patient’s drug allergy might result in prescribing a harmful medication, like penicillin to someone allergic. Similarly, incorrect blood pressure readings could lead to a misdiagnosis of hypertension, while delays in lab results may force clinicians to act on outdated information.

Another problem is the variability poor data introduces into clinical datasets. This "noise" can lead to statistical errors, such as false positives (Type 1 errors) or false negatives (Type 2 errors), skewing treatment outcomes and potentially causing harm. As Francis Lau, Editor of the Handbook of eHealth Evaluation, aptly puts it:

"The consequence of poor-quality data can be catastrophic especially if the care provided is based on incomplete, inaccurate, inaccessible or outdated information from the eHealth systems".

When data is inconsistent or incomplete, clinicians struggle to get a comprehensive view of a patient’s health, making informed care decisions even harder.

Failed Analytics and Decision-Making

Healthcare organizations increasingly rely on analytics to guide decisions, but poor data quality undermines these efforts. For example, population health dashboards built on flawed data can misrepresent disease prevalence or risk factors. A diabetes diagnosis might appear in records without corresponding HbA1c test results, or metformin prescriptions might exist without a documented diabetes diagnosis.

Predictive models also suffer when trained on incomplete or biased datasets. These flawed models can produce inaccurate forecasts for patient outcomes, resource allocation, or the effectiveness of interventions. Between 2020 and 2022, the CDC published around 90 scientific articles on COVID-19 based on large healthcare datasets, highlighting the importance of reliable data during public health crises. When data quality falters, these analytics lose their credibility, potentially leading to misguided policies and practices. Beyond analytics, these issues spill over into operational inefficiencies and financial losses.

Operational and Financial Costs

The financial impact of poor data quality is staggering. Studies estimate it costs businesses between $9.7 million and $14.2 million annually. In healthcare, this often translates to duplicate tests, billing errors, and denied claims, all of which delay revenue and waste resources.

Duplicate patient records are a common issue, affecting 5%–10% of cases - and up to 20% in organizations with multiple locations. These duplicates not only increase administrative workloads but also heighten the risk of errors. Resolving discrepancies in inaccurate data often requires manual intervention, which delays treatments and creates bottlenecks in administrative processes. Over time, this erodes trust in automated systems, prompting staff to rely on manual methods, which ironically introduce even more errors.

This inefficiency affects productivity too. Skilled clinical staff may find themselves spending more time fixing data errors than caring for patients, leading to backlogs and reduced efficiency. Addressing these issues requires strong data governance practices, which will be discussed later.

Regulatory and Reimbursement Risks

Poor data quality also brings compliance and reimbursement challenges. Misidentifying patients through duplicate or overlapping records increases the likelihood of HIPAA violations and unauthorized data exposure. From 2009 to 2023, there were 5,887 healthcare data breaches involving at least 500 records, exposing over 519 million healthcare records.

The healthcare industry’s shift from fee-for-service to value-based care models further highlights the need for accurate data. The American Health Information Management Association (AHIMA) emphasizes this point:

"As the velocity, variety, and volume of electronic health information continues to grow, and as providers and payers continue to move from a fee-for-service to a value and outcome-based reimbursement model, the need for high-quality data will become increasingly important".

Errors in clinical documentation or coding can lead to denied claims, increased administrative burdens for appeals, and lost revenue in outcome-based reimbursement models. Moreover, reporting mistakes to CMS can trigger audits and penalties, while inconsistent data standards leave organizations vulnerable to regulatory failures.

sbb-itb-116e29a

Strategies and Tools for Improving Data Quality

Elevating data quality in healthcare demands a mix of strong governance, advanced technical tools, and a commitment across the organization. When healthcare providers adopt these strategies, they can minimize errors, enhance patient outcomes, and build confidence in their analytics systems.

Data Governance Frameworks

A reliable governance framework ensures clear accountability for data quality throughout the organization. Data stewardship assigns specific individuals or teams to monitor data accuracy, consistency, and compliance. These stewards work with standardized policies that outline how data should be entered, managed, and shared.

Data dictionaries are a key component, offering consistent terminology and definitions across departments. This consistency reduces misunderstandings and misinterpretations. As HealthIT.gov points out:

"A structured data governance framework is essential for ensuring that healthcare data is accurate, reliable, and fit for purpose."

In 2023, the Canadian Primary Care Sentinel Surveillance Network (CPCSSN) implemented a comprehensive governance framework. This initiative, led by Dr. Greiver, included automated data cleaning algorithms and collaboration among healthcare providers. The result? A 22% improvement in coding chronic conditions within electronic medical records. Alongside governance, technical methods play a critical role in maintaining data integrity.

Technical Practices for Data Quality Management

Technical practices like data profiling help identify errors and inconsistencies before data enters analytics workflows. One effective tool is the Data Quality Probe (DQP), which uses clinical queries to pinpoint specific issues. For instance, a DQP might flag a diabetes diagnosis missing a corresponding HbA1c test result.

Standardized coding systems, such as ICD-10, LOINC, and SNOMED CT, ensure clinical concepts are uniformly understood. Each codeable concept should include a code, a display name, and a code system identifier.

Integration and Interoperability Approaches

Fragmented systems demand strong integration strategies. Standards like HL7 and FHIR APIs enable seamless data exchange between electronic health records, lab systems, and analytics platforms. Centralized data warehouses bring together information from multiple sources to act as a single reference point for analytics. Simple Assessment Modules (SAMs) can even evaluate patient messages in real time - such as clinical summaries or lab reports - and provide pass/fail results based on specific quality standards. This type of instant validation helps catch errors before they spread through downstream systems.

Organizational Practices for Sustained Data Quality

Achieving data quality isn’t just about technology - it requires a cultural shift within the organization. A "quality culture" ensures that every stakeholder values and takes responsibility for data accuracy. Feedback loops allow cleaned and standardized data to be sent back to providers, helping them understand the impact of their entries and identify areas for improvement. Regular audits, supported by automated probes, can uncover errors of both omission and commission.

As Taylor Larsen from Health Catalyst explains:

"The only way to ensure healthcare organization leaders, managers, and providers have data fit for critical decision making... is to establish quality at the beginning of the data life cycle and maintain it throughout all processes".

Continuous improvement cycles are key, involving collaboration among leadership, managers, subject matter experts, and analytics professionals to refine quality standards. With these practices in place, specialized platforms can embed quality controls at every stage of the process.

Custom Solutions with Scimus

By combining governance, technical, and organizational strategies, custom solutions ensure data quality is built into every step of the analytics lifecycle. Scimus creates healthcare analytics platforms that incorporate quality controls right from the start. Instead of treating quality as an afterthought, Scimus embeds validation rules, automated testing, and monitoring tools into the platform's architecture.

These tailored solutions include data governance frameworks aligned with specific workflows, automated cleaning algorithms to standardize clinical terminology, and real-time quality dashboards that provide a clear view of data health. Scimus also offers ongoing support to adapt these systems as healthcare standards evolve and new data sources emerge.

Integrating Data Quality into the Healthcare Analytics Lifecycle

When it comes to healthcare analytics, ensuring data quality isn't just a "nice-to-have." It's a must-have, woven into every stage of the analytics lifecycle. Rather than scrambling to fix issues after they arise, it's smarter - and far more effective - to embed quality controls from the start. By doing so, you create a system where clinicians and administrators can confidently rely on the insights they receive.

Defining Quality in Requirements and Architecture

The journey begins during the design phase. Think of data as a strategic asset that requires clear quality standards from day one. Before a single line of code is written, you need to define what "quality" means for your specific use case.

Focus on five critical dimensions: Correctness, Completeness, Concordance, Plausibility, and Currency. Each dimension should have clear thresholds. For instance, completeness might mean that every blood pressure reading includes systolic, diastolic, and timestamp values. Concordance could require that a diabetes diagnosis is always supported by an HbA1c test result.

Data lineage modeling is another key step in the planning process. It helps trace quality issues from their source to downstream analytics, ensuring errors don’t spread unnoticed. A two-stage architectural framework often works best: the first stage enforces domain constraints and relational integrity at the source system level, while the second ensures semantic consistency when integrating data from multiple locations.

Subject Matter Experts (SMEs) play a crucial role here. They provide the clinical context necessary to determine whether the data makes sense. For example, they might flag a recorded heart rate that doesn’t align with a patient’s age or condition. Without their input, technical validation alone might miss critical issues.

Once quality is baked into the architecture, the next step is rigorous testing.

QA and Testing for Analytics Platforms

Testing healthcare datasets is a bit more complex than standard software QA. Tools like Data Quality Probes (DQPs) are essential. These probes use predefined clinical queries to catch inconsistencies. For example, a probe might flag a patient with a diabetes diagnosis but no HbA1c test result, or a prescription for penicillin given to someone with a documented allergy to it.

Unit tests are another critical tool, especially in the early stages of the data pipeline. They help catch errors before they spread further. These tests check both the structure and content of the data, ensuring it complies with U.S. standards like currency formats (USD), date formats (MM/DD/YYYY), and temperature units (Fahrenheit).

Automated health checks add another layer of protection. These checks can monitor for issues like missing data or inconsistent values and stop the data flow if critical problems are detected. Multi-stage validation takes this further by applying rules for format, cross-table consistency, and state-dependent logic - such as ensuring a discharge record always follows an admission.

A real-world example of effective testing comes from the Canadian Primary Care Sentinel Surveillance Network (CPCSSN). Between May and August 2013, they introduced a Data Presentation Tool across a family health team in Ontario. By automating data cleaning - like standardizing all weights to kilograms and unifying HbA1c terminology - they improved coding accuracy for five chronic conditions by 22%. This also enabled the creation of reliable disease registries.

Once testing measures are in place, it's vital to maintain quality through continuous lifecycle management.

Lifecycle Management for Models and Analytics

Data quality isn't a "set it and forget it" process. Analytics platforms need ongoing monitoring and updates to stay relevant as clinical guidelines evolve and new data sources emerge. Regular recalibration of predictive models ensures they remain accurate as patient demographics and treatment protocols change.

For AI and machine learning models, bias monitoring is especially important. Poor-quality data during development can introduce hidden biases, leading to flawed clinical decisions. Regular audits of training data can help catch these issues early.

Service Level Agreements (SLAs) can also help maintain high standards. These agreements set benchmarks for data reliability, including factors like freshness, volume, and accuracy. Column-level lineage mapping further supports this by making it easier to trace anomalies back to their source, speeding up root cause analysis.

Finally, continuous improvement cycles are key. Collaboration between leadership, clinical SMEs, managers, and analytics teams ensures that quality standards evolve alongside the platform. By working together, these teams can refine processes and keep the system running smoothly as it matures.

Conclusion: Building Trust Through Better Data Quality

Data quality isn’t just a task to check off - it’s the backbone of trust in healthcare analytics. When data is accurate, complete, and timely, it empowers faster, more confident decisions that directly enhance patient care and streamline operations. On the flip side, poor data quality erodes trust, leading to issues like misdiagnoses and denied insurance claims.

Beyond its clinical importance, maintaining high data quality is a regulatory necessity. Adhering to rigorous standards is critical for compliance and long-term success. Organizations that neglect formal quality measurement processes risk financial setbacks and potential regulatory penalties.

This is where innovative solutions play a crucial role. Scimus integrates data quality into every phase of healthcare analytics. By leveraging custom-built systems, HIPAA-compliant workflows, and machine learning-powered anomaly detection, Scimus ensures data is accurate, secure, and ready for use right from the beginning. Their expertise in interoperability standards, such as FHIR and HL7, bridges gaps between systems like EHRs, labs, and billing platforms. The result? Unified, real-time patient records that clinicians can rely on. These tailored solutions lay the groundwork for fostering a lasting culture of quality.

However, building a true culture of quality requires more than just technology. It demands collaboration across departments, including IT, compliance, and clinical teams. Ongoing training and regular audits are also essential. When healthcare organizations start treating data as a strategic asset rather than a byproduct of care, they unlock its full potential - enabling everything from smoother daily operations to breakthroughs in population health and clinical research.

FAQs

What are the biggest challenges in ensuring high-quality data for healthcare analytics?

Ensuring reliable data in healthcare analytics is no small feat, and several challenges can directly affect both patient care and operational decision-making. One common issue stems from manual data entry errors or missing information - think incomplete fields or duplicate records. These slip-ups can undermine the accuracy and dependability of the data being analyzed. On top of that, delayed updates or the absence of real-time data can leave systems relying on outdated information, which weakens the performance of predictive models and clinical decision-making tools.

Another significant obstacle lies in the lack of standardization and interoperability across electronic health records (EHRs) and other systems. When data comes in inconsistent formats, integrating and analyzing it becomes a daunting task. Lastly, weak data governance practices - like limited automated quality checks or inadequate oversight - can let errors slip through the cracks and spread across analytics processes.

Tackling these issues is essential to making healthcare analytics platforms a reliable source of accurate and actionable insights, ultimately empowering better clinical and operational decisions.

How does poor data quality affect patient safety and healthcare outcomes?

Poor data quality poses a serious threat to patient safety and healthcare outcomes by introducing errors into clinical decisions. When data is inaccurate or incomplete - like an incorrect diagnosis, missing lab results, or delayed updates - it can lead predictive models or electronic health records to suggest flawed recommendations. The consequences? A heightened risk of misdiagnosis, inappropriate treatments, or delays in urgent care.

Take this example: a single data entry mistake could lead to administering the wrong medication or dosage. This kind of error can result in severe harm, or in worst cases, even death. Beyond the immediate danger to patients, such mistakes also undermine trust in healthcare systems, increase the frequency of adverse events, and drive up costs due to the need for corrective care. Prioritizing accurate, complete, and timely data is crucial to protecting patients and delivering high-quality care.

How can healthcare organizations ensure high-quality data for analytics?

Healthcare organizations can boost the reliability of their data by weaving quality checks into every stage of the data lifecycle. Think of data as a product - one that requires clear specifications and attention to structural details like missing fields, inconsistent formats, and duplicate entries. Partnering with subject-matter experts is key to defining what "accurate data" means for specific clinical or operational purposes.

To make this approach actionable, start with a detailed data-quality plan. Regularly profile your data to spot anomalies, and implement validation measures at the point of entry to catch errors before they ripple through the system. Assign clear data ownership roles, designate data stewards, and deploy automated rule-based checks to keep quality intact over time. These steps ensure that analytics platforms work with dependable, ready-to-use data, ultimately driving better insights and enhancing patient care.