Building a multi-tenant SaaS platform can save costs and improve efficiency, but only if done securely and thoughtfully. Here's what you need to know:

- Multi-tenancy allows multiple customers (tenants) to share the same infrastructure while keeping their data isolated.

- Key challenges include tenant isolation, preventing data leaks, handling performance issues (like "noisy neighbors"), and scaling efficiently.

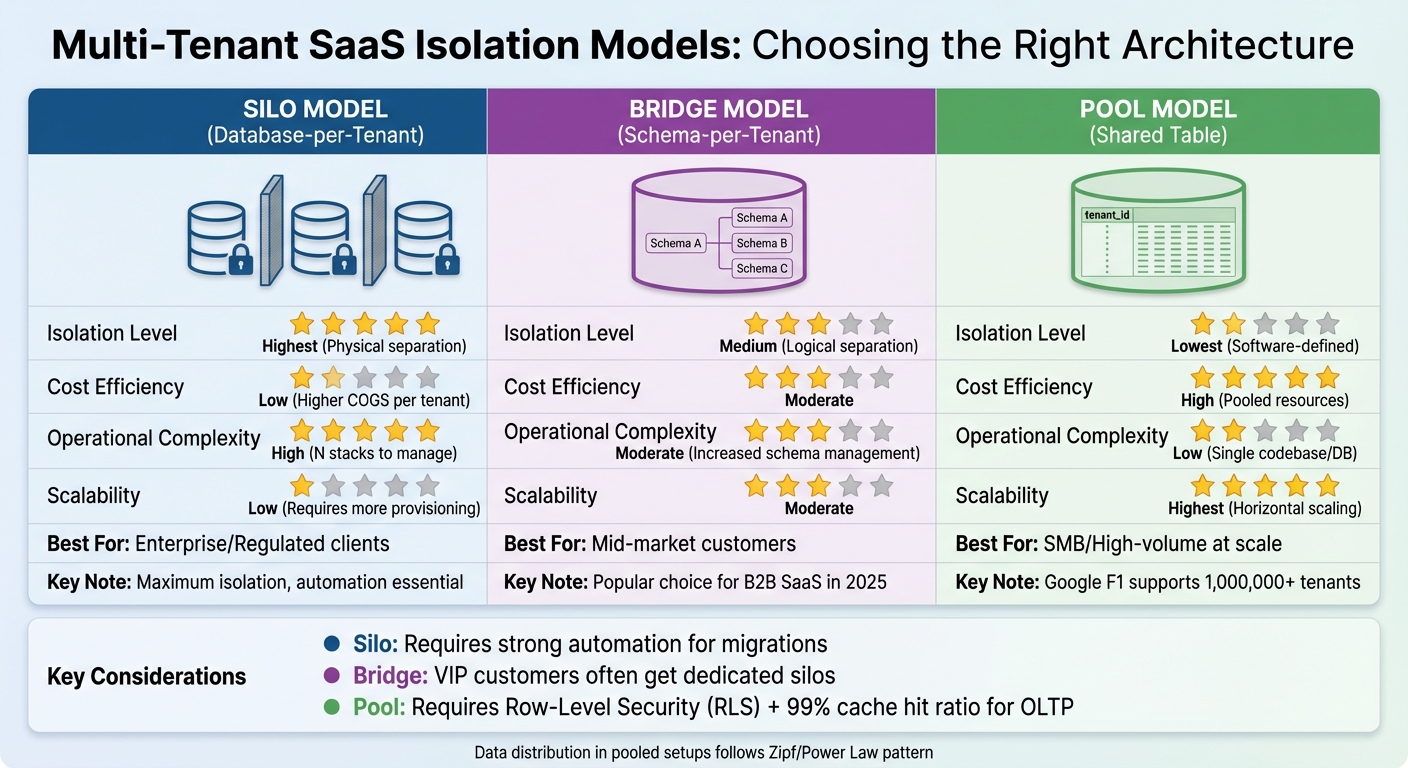

- Three main isolation models:

  - Silo (DB-per-tenant): High isolation, costly, suited for enterprise clients.

  - Bridge (Schema-per-tenant): Balanced isolation and cost, popular for mid-sized clients.

  - Pool (Shared Table): Cost-effective, less secure, ideal for smaller tenants at scale.

- Scalability and security must work hand-in-hand, with safeguards like Row-Level Security (RLS), tenant-aware authentication, and robust monitoring.

- Tools like Kubernetes and cloud services (e.g., AWS Lambda, Aurora Serverless) help manage resources and scale infrastructure.

Key takeaway: Success depends on early decisions about architecture, isolation, and security. Avoiding mistakes like data leaks or poor scaling ensures your platform grows efficiently without compromising tenant trust.

Building Multi-Tenant SaaS Architectures • Tod Golding & Bill Tarr • GOTO 2024

Key Architecture Decisions for Multi-Tenant SaaS

Multi-Tenant SaaS Isolation Models Comparison: Silo vs Bridge vs Pool

The architecture decisions you make for your SaaS platform will shape its ability to scale and remain manageable over time. At the core of these decisions are three main tenant isolation models: Silo (database-per-tenant), Bridge (schema-per-tenant), and Pool (shared database). Each model comes with its own set of trade-offs, influencing costs, security, and operational complexity.

Tenant Isolation Models

The Silo Model assigns each tenant their own dedicated database instance. This ensures maximum isolation, making it the go-to choice for enterprise clients with strict compliance demands or performance guarantees. However, this model can be expensive and operationally demanding, especially as the number of tenants increases. To handle the complexity of managing multiple databases, automation for migrations and updates becomes essential.

On the other end of the spectrum, the Pool Model consolidates all tenants into shared database tables, differentiating data with a tenant_id column. This approach is highly cost-effective and scales well, even for a massive number of tenants. For instance, Google F1, the database behind AdWords, supports over 1,000,000 tenants using this shared model. The downside? It relies entirely on software-defined isolation. A single bug in your application logic could lead to one tenant's data being exposed to another.
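The shared-table approach can be sketched with Python's built-in sqlite3 module. This is a minimal illustration, not a production design: the `invoices` table, the `TenantScopedInvoices` class, and the `make_pooled_db` helper are hypothetical names. The point is that callers never write a query without the tenant_id filter, because the filter lives in one place.

```python
import sqlite3


class TenantScopedInvoices:
    """Hypothetical repository: every statement is bound to one tenant_id."""

    def __init__(self, conn: sqlite3.Connection, tenant_id: str) -> None:
        self._conn = conn
        self._tenant_id = tenant_id

    def add(self, amount_cents: int) -> int:
        # The tenant_id comes from the repository, never from the caller.
        cur = self._conn.execute(
            "INSERT INTO invoices (tenant_id, amount_cents) VALUES (?, ?)",
            (self._tenant_id, amount_cents),
        )
        return cur.lastrowid

    def list_amounts(self) -> list[int]:
        # Every read is filtered by tenant_id.
        rows = self._conn.execute(
            "SELECT amount_cents FROM invoices WHERE tenant_id = ? ORDER BY id",
            (self._tenant_id,),
        )
        return [row[0] for row in rows]


def make_pooled_db() -> sqlite3.Connection:
    """Create an in-memory shared table with a tenant_id column (illustrative schema)."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE invoices ("
        " id INTEGER PRIMARY KEY,"
        " tenant_id TEXT NOT NULL,"
        " amount_cents INTEGER NOT NULL)"
    )
    # Leading the index with tenant_id keeps per-tenant lookups cheap.
    conn.execute("CREATE INDEX idx_invoices_tenant ON invoices (tenant_id, id)")
    return conn
```

Because the isolation here is purely software-defined, a query written outside the repository would bypass it, which is exactly the risk described above.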

The Bridge Model strikes a balance between the two extremes, assigning each tenant a separate schema within a shared database. This approach offers better isolation than pooled models without the overhead of managing dedicated databases. It's a popular choice for B2B SaaS platforms as of 2025, where most tenants share resources, but high-value or regulated clients - often referred to as "VIP" customers - are placed in dedicated silos.

In pooled setups, data distribution often follows a Zipf/Power Law pattern: a handful of large tenants hold a disproportionate share of the data, but the largest tenant's share of the total shrinks as the number of tenants grows.
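To make the Zipf claim concrete, here is a small worked calculation. It assumes a pure Zipf law in which the tenant at rank k has weight 1/k^s, with the exponent s = 1 chosen only for illustration; real tenant distributions will differ.

```python
def largest_tenant_share(num_tenants: int, exponent: float = 1.0) -> float:
    """Share of total data held by the rank-1 tenant under an assumed Zipf law."""
    if num_tenants < 1:
        raise ValueError("num_tenants must be at least 1")
    # The tenant at rank k has weight 1 / k**exponent, so rank 1 has weight 1.
    total_weight = sum(1.0 / rank**exponent for rank in range(1, num_tenants + 1))
    return 1.0 / total_weight
```

Under these assumptions the largest tenant holds roughly 34% of the data with 10 tenants and roughly 13% with 1,000 tenants, which is why a single shared database can stay balanced as the tenant count grows.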

Data Partitioning and Access Management

Selecting an isolation model is just the beginning. You also need robust data segregation mechanisms to ensure tenant security. For pooled models, Row-Level Security (RLS) has become a standard practice, enforcing isolation directly at the database engine level. This method adds an extra layer of protection, ensuring that even if your application logic fails, the database itself blocks unauthorized cross-tenant access.
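As a sketch of what RLS setup can look like, the helper below generates PostgreSQL statements for a pooled table. Several things here are assumptions rather than anything the article specifies: PostgreSQL as the engine, a text-typed `tenant_id` column, the custom session setting name `app.current_tenant`, the policy name `tenant_isolation`, and a driver that uses `%s` placeholders.

```python
import re

_TABLE_NAME = re.compile(r"^[a-z_][a-z0-9_]*$")
_SETTING_NAME = re.compile(r"^[a-z_][a-z0-9_]*\.[a-z_][a-z0-9_]*$")


def rls_setup_statements(table: str, setting: str = "app.current_tenant") -> list[str]:
    """Return one-time DDL that enables tenant isolation on a pooled table."""
    if not _TABLE_NAME.match(table):
        raise ValueError(f"unsafe table name: {table!r}")
    if not _SETTING_NAME.match(setting):
        raise ValueError(f"unsafe setting name: {setting!r}")
    return [
        f"ALTER TABLE {table} ENABLE ROW LEVEL SECURITY",
        # FORCE makes the policy apply to the table owner as well.
        f"ALTER TABLE {table} FORCE ROW LEVEL SECURITY",
        # Rows are visible only when tenant_id matches the session setting.
        f"CREATE POLICY tenant_isolation ON {table} "
        f"USING (tenant_id = current_setting('{setting}'))",
    ]


def set_tenant_statement(
    tenant_id: str, setting: str = "app.current_tenant"
) -> tuple[str, tuple[str, str]]:
    """Return the per-transaction statement that binds the tenant context."""
    # The third argument (is_local = true) scopes the value to the current transaction.
    return "SELECT set_config(%s, %s, true)", (setting, tenant_id)
```

The application would run the second statement at the start of each transaction, using a tenant ID taken from verified authentication claims. Note that PostgreSQL superusers and roles with the BYPASSRLS attribute are not subject to these policies, so the application should connect as an ordinary role.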

Tenant context should always be derived from secure, server-side authentication claims, such as JWTs. Avoid relying on client-sent tenant IDs, as this can open the door to manipulation.

For workloads subject to regulations like GDPR or HIPAA, physical partitioning (as with the Silo Model) is often the simplest way to demonstrate isolation to auditors, although neither regulation prescribes a specific database topology. Logical partitioning (used in the Pool Model) is typically sufficient for most commercial SaaS applications. In pooled environments, aim for a cache hit ratio of about 99% for OLTP applications. If your database frequently reads from disk, it's time to scale out to a distributed model.

To enhance observability and prevent data leaks, prefix cache keys, logs, and metrics with tenant IDs.

Comparison Table: Tenant Isolation Models

| Model | Isolation Level | Cost Efficiency | Operational Complexity | Scalability | Best For |
|---|---|---|---|---|---|
| Silo (DB-per-tenant) | Highest (Physical separation) | Low (Higher COGS per tenant) | High (N stacks to manage) | Low (Requires more provisioning) | Enterprise/Regulated clients |
| Bridge (Schema-per-tenant) | Medium (Logical separation) | Moderate | Moderate (Increased schema management) | Moderate | Mid-market customers |
| Pool (Shared Table) | Lowest (Software-defined) | High (Pooled resources) | Low (Single codebase/DB) | Highest (Horizontal scaling) | SMB/High-volume at scale |

The Pool Model requires strong safeguards to prevent the "noisy neighbor" problem, where one tenant's heavy activity impacts the performance of others. For Silo Models, automating schema migrations is key to avoiding operational hiccups. With these isolation models and data management practices in place, the next step is to explore tenant-aware security measures and efficient routing strategies.

Security and Tenant Routing

With an isolation model chosen, the next task is securing and routing tenant-specific requests. Every request should pass through two critical layers: authentication and tenant isolation enforcement. Together, these layers ensure that each request is accurately tied to its respective tenant.

Tenant-Aware Authentication and Authorization

A secure multi-tenant system starts with establishing a "SaaS identity", which binds a user's identity directly to a tenant ID. This tenant context should be a core part of your authentication model. A common approach is using JSON Web Tokens (JWTs) that include custom claims like tenant_id or tier. These claims are embedded by your identity provider - such as Amazon Cognito, Auth0, or Okta - during login. This design enables a stateless authentication system.
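The sketch below shows how a service might derive tenant context from a signed token. To stay self-contained it uses only the standard library and HS256 with a shared secret, and `sign_token` stands in for the identity provider. Providers such as Cognito, Auth0, or Okta typically issue asymmetrically signed tokens that should be verified with a maintained JWT library, so treat this as an illustration of the flow. The `tier` default and the returned dictionary shape are assumptions.

```python
import base64
import hashlib
import hmac
import json
import time


def _b64url_encode(raw: bytes) -> str:
    return base64.urlsafe_b64encode(raw).rstrip(b"=").decode("ascii")


def _b64url_decode(text: str) -> bytes:
    return base64.urlsafe_b64decode(text + "=" * (-len(text) % 4))


def sign_token(claims: dict, secret: bytes) -> str:
    """Stand-in for the identity provider: issues an HS256-signed JWT."""
    header = _b64url_encode(json.dumps({"alg": "HS256", "typ": "JWT"}).encode())
    payload = _b64url_encode(json.dumps(claims).encode())
    signature = hmac.new(secret, f"{header}.{payload}".encode(), hashlib.sha256).digest()
    return f"{header}.{payload}.{_b64url_encode(signature)}"


def tenant_context(token: str, secret: bytes) -> dict:
    """Verify signature and expiry, then return the tenant-related claims."""
    try:
        header_b64, payload_b64, signature_b64 = token.split(".")
        header = json.loads(_b64url_decode(header_b64))
        signature = _b64url_decode(signature_b64)
    except ValueError as exc:
        raise PermissionError("malformed token") from exc
    # Pin the algorithm so a token cannot select a weaker one (e.g. "none").
    if not isinstance(header, dict) or header.get("alg") != "HS256":
        raise PermissionError("unexpected algorithm")
    expected = hmac.new(
        secret, f"{header_b64}.{payload_b64}".encode(), hashlib.sha256
    ).digest()
    if not hmac.compare_digest(signature, expected):
        raise PermissionError("bad signature")
    claims = json.loads(_b64url_decode(payload_b64))
    if claims.get("exp", 0) <= time.time():
        raise PermissionError("token expired")
    if "tenant_id" not in claims:
        raise PermissionError("token has no tenant_id claim")
    return {
        "tenant_id": claims["tenant_id"],
        "tier": claims.get("tier", "standard"),  # default tier is an assumption
        "user_id": claims.get("sub"),
    }
```

Because the tenant ID is read only after the signature check, a client cannot switch tenants by editing the request; it would have to forge the token.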

For authorization, consider implementing a Policy Decision Point (PDP) to evaluate access based on declarative policies. These policies, written in languages like Cedar (used by Amazon Verified Permissions) or Rego (used by Open Policy Agent), enforce rules such as ensuring the tenant_id in the JWT matches the resource being accessed. This approach minimizes risks from accidental misconfigurations and simplifies auditing.
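Cedar and Rego each have their own policy syntax. The Python sketch below only illustrates the kind of rule such a policy encodes: deny by default, check the tenant boundary first, then check roles. The `Principal` and `Resource` types and the `ROLE_ACTIONS` table are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Principal:
    user_id: str
    tenant_id: str  # taken from the verified JWT claim
    roles: frozenset


@dataclass(frozen=True)
class Resource:
    resource_id: str
    tenant_id: str  # owner of the resource


# Hypothetical role-to-action mapping; a real PDP would load declarative policies.
ROLE_ACTIONS = {
    "viewer": {"read"},
    "editor": {"read", "write"},
}


def is_allowed(principal: Principal, action: str, resource: Resource) -> bool:
    """Default-deny decision: tenant boundary first, then role permissions."""
    # Rule 1: the caller's tenant must own the resource.
    if principal.tenant_id != resource.tenant_id:
        return False
    # Rule 2: at least one of the caller's roles must grant the action.
    return any(action in ROLE_ACTIONS.get(role, set()) for role in principal.roles)
```

Keeping the tenant check as the first rule means no role, however privileged, can reach across tenants by accident.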

"In well-designed SaaS applications, this authorization step should not rely on a centralized authorization service. A centralized authorization service is a single point of failure in an application."

- AWS Security Blog

For pooled models, JWTs can also provide the tenant context needed to obtain temporary, tenant-scoped credentials through services like AWS Security Token Service (STS).

Once authentication is secure, the next focus is routing requests to the correct resources for each tenant.

Routing and Service Distribution

Tenant routing ensures that requests are directed to the right infrastructure, depending on your chosen isolation model. In silo architectures - where each tenant has its own dedicated resources - DNS-based routing is commonly used, with tools like Amazon Route 53. Each tenant is assigned a unique subdomain (e.g., acme.yourapp.com), which directs traffic to their specific VPC or database.

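For pooled or bridge models, tenant resolution happens dynamically at runtime, often at the API Gateway level. This is typically achieved by inspecting the tenant claims in the JWT (sent in the Authorization header) or the URL path. A tenant ID taken from the URL should always be checked against the claim in the verified token.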
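A minimal sketch of runtime tenant resolution follows. It reuses the article's example domain `yourapp.com`. The reserved subdomains and the `/t/<tenant>/...` path convention are assumptions made for illustration.

```python
from typing import Optional
from urllib.parse import urlsplit

BASE_DOMAIN = "yourapp.com"  # from the article's subdomain example
RESERVED_SUBDOMAINS = {"www", "api"}  # hypothetical non-tenant hosts


def resolve_tenant(url: str) -> Optional[str]:
    """Resolve a tenant slug from the subdomain, else from a /t/<tenant>/ path."""
    parts = urlsplit(url)
    host = (parts.hostname or "").lower()
    suffix = "." + BASE_DOMAIN
    if host.endswith(suffix):
        label = host[: -len(suffix)]
        # Accept a single-label subdomain that is not reserved.
        if label and "." not in label and label not in RESERVED_SUBDOMAINS:
            return label
    segments = [segment for segment in parts.path.split("/") if segment]
    if len(segments) >= 2 and segments[0] == "t":
        return segments[1]
    return None


def require_matching_tenant(url: str, token_tenant_id: str) -> str:
    """Reject requests whose URL tenant disagrees with the verified token claim."""
    url_tenant = resolve_tenant(url)
    if url_tenant is not None and url_tenant != token_tenant_id:
        raise PermissionError("URL tenant does not match token tenant")
    return token_tenant_id
```

The URL is treated only as a routing hint. The tenant ID that downstream code uses is always the one from the verified token.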

To maintain performance and prevent bottlenecks, implement per-tenant rate limiting and weighted queues based on tenant tiers. Additionally, include the tenant_id in cache keys (e.g., tenant:123:resource:456) to prevent accidental cross-tenant data exposure.
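Per-tenant rate limiting is commonly built on a token bucket. The sketch below keeps one bucket per tenant and sizes it by tier. The tier names and numbers in `TIER_LIMITS` are made up, and the state lives in process memory, so a multi-instance deployment would need a shared store instead.

```python
import time
from dataclasses import dataclass
from typing import Callable

# Hypothetical tiers: (tokens refilled per second, burst capacity).
TIER_LIMITS = {"free": (5.0, 10.0), "premium": (50.0, 100.0)}


@dataclass
class TokenBucket:
    rate: float
    capacity: float
    tokens: float
    updated_at: float

    def allow(self, now: float) -> bool:
        # Refill in proportion to elapsed time, capped at the burst capacity.
        elapsed = max(0.0, now - self.updated_at)
        self.tokens = min(self.capacity, self.tokens + elapsed * self.rate)
        self.updated_at = now
        if self.tokens >= 1.0:
            self.tokens -= 1.0
            return True
        return False


class TenantRateLimiter:
    """One bucket per tenant, so a busy tenant only exhausts its own budget."""

    def __init__(self, clock: Callable[[], float] = time.monotonic) -> None:
        self._clock = clock
        self._buckets: dict[str, TokenBucket] = {}

    def allow(self, tenant_id: str, tier: str) -> bool:
        now = self._clock()
        bucket = self._buckets.get(tenant_id)
        if bucket is None:
            rate, capacity = TIER_LIMITS[tier]
            # New tenants start with a full bucket.
            bucket = TokenBucket(rate, capacity, capacity, now)
            self._buckets[tenant_id] = bucket
        return bucket.allow(now)
```

A request handler would call `limiter.allow(tenant_id, tier)` with values from the verified token and return an HTTP 429 response when it yields `False`.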

Another critical feature is incorporating tenant-level kill switches into your routing layer. These switches allow you to instantly disable a specific tenant’s access in cases of abusive behavior or security concerns, while leaving the rest of the platform unaffected. As Maria Paktiti from WorkOS aptly notes:

"If your system doesn't make it hard to accidentally ignore tenant boundaries, you're not multi-tenant yet, you're multi-customer."

Scaling Infrastructure with Kubernetes and Cloud Tools

To support growth effectively, building scalable infrastructure is key. Kubernetes has emerged as the preferred orchestration platform for multi-tenant SaaS applications, but getting it right takes careful planning. As AWS SaaS Factory architects Toby Buckley and Ranjith Raman explain:

"The programming model, cost efficiency, security, deployment, and operational attributes of EKS represent a compelling model for SaaS providers."

Containerization and Orchestration

While Kubernetes' default control plane is designed for single-tenancy, you can configure it to support multi-tenant isolation. This requires choosing between three main isolation models: namespace-per-tenant, virtual control planes, or cluster-per-tenant. For most SaaS platforms, the namespace-per-tenant model strikes the best balance between cost and isolation, using logical separation through RBAC and network policies.

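To manage resources effectively in a namespace-per-tenant setup, apply ResourceQuotas and LimitRanges to each tenant namespace, and add a default-deny network policy enforced by a CNI plugin like Calico or Cilium. The quotas and limit ranges cap the CPU, memory, and number of pods a tenant can consume, while the network policy blocks traffic to and from tenant pods unless explicitly allowed. If stronger isolation is required, you can dedicate specific nodes to particular tenants, for example with taints, tolerations, and node affinity.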

Streamline tenant onboarding with Infrastructure as Code tools such as Terraform or AWS CloudFormation. When a new customer signs up, automate the creation of their namespace, apply RBAC policies, set network configurations, and deploy their microservices through your CI/CD pipeline. This approach not only simplifies onboarding but ensures consistency in tenant environments. Security can be further reinforced by using admission controllers like OPA Gatekeeper or Kyverno to enforce policies - blocking privileged containers or preventing host path mounts.
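The onboarding step can be sketched as a function that produces the per-tenant Kubernetes objects. This uses plain Python rather than Terraform or CloudFormation, purely to show the shape of the resources. The `tenant-<name>` namespace convention, the object names, and all quota values are assumptions.

```python
import json
import re

_DNS_LABEL = re.compile(r"^[a-z0-9]([a-z0-9-]*[a-z0-9])?$")


def tenant_manifests(
    tenant: str, cpu: str = "4", memory: str = "8Gi", pods: str = "20"
) -> list[dict]:
    """Build Namespace, ResourceQuota, LimitRange, and default-deny NetworkPolicy."""
    namespace = f"tenant-{tenant}"
    if not _DNS_LABEL.match(tenant) or len(namespace) > 63:
        raise ValueError(f"tenant name is not a valid DNS label: {tenant!r}")
    return [
        {
            "apiVersion": "v1",
            "kind": "Namespace",
            "metadata": {"name": namespace, "labels": {"tenant": tenant}},
        },
        {
            # Caps the aggregate CPU, memory, and pod count for the namespace.
            "apiVersion": "v1",
            "kind": "ResourceQuota",
            "metadata": {"name": "tenant-quota", "namespace": namespace},
            "spec": {
                "hard": {
                    "requests.cpu": cpu,
                    "requests.memory": memory,
                    "limits.cpu": cpu,
                    "limits.memory": memory,
                    "pods": pods,
                }
            },
        },
        {
            # Supplies per-container defaults so pods without limits still count.
            "apiVersion": "v1",
            "kind": "LimitRange",
            "metadata": {"name": "tenant-defaults", "namespace": namespace},
            "spec": {
                "limits": [
                    {
                        "type": "Container",
                        "default": {"cpu": "500m", "memory": "512Mi"},
                        "defaultRequest": {"cpu": "100m", "memory": "128Mi"},
                    }
                ]
            },
        },
        {
            # An empty podSelector selects every pod; no rules means deny all.
            "apiVersion": "networking.k8s.io/v1",
            "kind": "NetworkPolicy",
            "metadata": {"name": "default-deny-all", "namespace": namespace},
            "spec": {"podSelector": {}, "policyTypes": ["Ingress", "Egress"]},
        },
    ]


def render_json(manifests: list[dict]) -> str:
    """Serialize the manifests for the provisioning pipeline to apply."""
    return json.dumps(manifests, indent=2, sort_keys=True)
```

A default-deny egress policy also blocks DNS lookups, so a real setup would add explicit allow rules for DNS and for any shared services the tenant's workloads need.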

With these foundations in place, integrating serverless and cloud provisioning tools can take scalability to the next level.

Cloud Provisioning and Serverless Components

By combining Kubernetes with serverless components, you can optimize both cost and scalability. AWS Lambda and Aurora Serverless, for instance, adjust resource allocation based on demand, so you only pay for what you use. Lambda Authorizers can validate JWTs and inject tenant-specific context into execution environments, enabling dynamic creation of scoped IAM policies that restrict access to each tenant's data.
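A scoped policy of this kind might look like the sketch below. It assumes, purely for illustration, that tenant data lives in a DynamoDB table whose partition key is the tenant ID; the article does not name a data store. The list of actions is also an assumption. The resulting document could be passed as a session policy when requesting temporary credentials from STS.

```python
import json


def tenant_scoped_policy(table_arn: str, tenant_id: str) -> dict:
    """Build an IAM policy limiting access to items whose partition key is tenant_id."""
    if not tenant_id:
        raise ValueError("tenant_id must not be empty")
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": ["dynamodb:GetItem", "dynamodb:Query", "dynamodb:PutItem"],
                "Resource": [table_arn],
                "Condition": {
                    # Only items whose partition key equals this tenant ID are reachable.
                    "ForAllValues:StringEquals": {"dynamodb:LeadingKeys": [tenant_id]}
                },
            }
        ],
    }


def policy_json(table_arn: str, tenant_id: str) -> str:
    """Serialize the policy document as a JSON string."""
    return json.dumps(tenant_scoped_policy(table_arn, tenant_id))
```

Since the tenant ID comes from the validated JWT, the credentials handed to the function cannot read another tenant's items even if the application code omits a filter.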

To ensure high availability, deploy infrastructure across at least three Availability Zones. Amazon Aurora safeguards your data by storing six copies across these zones, protecting against potential data center failures. Additionally, configure API Gateway usage plans with tenant-specific API keys. This setup enforces tier-based throttling, preventing any single tenant's activity from affecting the performance of others.


Performance Optimization and Monitoring

Once your platform is live, it's crucial to ensure that every tenant enjoys smooth and reliable performance. Without proper monitoring and optimization, a single high-traffic tenant can disrupt the experience for others - commonly known as the "noisy neighbor" effect. To prevent this, you need strategies that work hand-in-hand with the secure and scalable infrastructure practices already in place.

Tenant-Aware Caching and Metrics

Caching can significantly reduce database load and improve response times, but in a multi-tenant system, it comes with risks. To avoid data leaks, always include the tenant_id in cache keys when using tools like Redis or Memcached. This ensures that one tenant’s data is never mistakenly served to another. Maintaining tenant boundaries at every layer, including caching, is non-negotiable for proper data isolation.

For example, in one reported case, using Redis with tenant-specific namespaces and composite indexes (which include tenant_id) cut database CPU usage by 40% and reduced API response times from 400 ms to 120 ms. When caching authorization data, include not only the tenant_id but also user_id and a role version identifier. Alternatively, set a short time-to-live (TTL) to minimize the risk of stale permissions being used.
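The key formats can be sketched as small helpers. The resource key follows the `tenant:123:resource:456` pattern used earlier in the article. The authorization key layout and the TTL value are assumptions.

```python
AUTHZ_TTL_SECONDS = 60  # hypothetical short TTL for cached permissions


def _part(value: object) -> str:
    """Validate one key component so it cannot spill into a neighbouring field."""
    text = str(value)
    if not text or ":" in text:
        raise ValueError(f"invalid cache key part: {value!r}")
    return text


def resource_cache_key(tenant_id: object, resource_type: str, resource_id: object) -> str:
    """Cache key for tenant data; tenant_id always leads the key."""
    return f"tenant:{_part(tenant_id)}:{_part(resource_type)}:{_part(resource_id)}"


def authz_cache_key(tenant_id: object, user_id: object, role_version: int) -> str:
    """Cache key for permissions; bumping role_version makes old entries unreachable."""
    return (
        f"tenant:{_part(tenant_id)}:authz:user:{_part(user_id)}"
        f":roles-v{int(role_version)}"
    )
```

Rejecting the delimiter inside a component matters: without that check, a tenant ID like `1:resource` could be crafted to collide with another tenant's keys.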

To enhance observability, label logs, metrics, and traces with tenant_id. This makes it easier to identify resource-heavy tenants and troubleshoot issues quickly without affecting others. Tenant-level kill switches are useful here as well: they let you disable a specific tenant's access or background processes without bringing down the entire system. Together, these caching and observability practices help keep tenant-specific data properly isolated.
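One way to label every log line with the tenant is a context variable plus a logging filter, as in the sketch below. The logger name, the log format, and the `-` placeholder for requests without a tenant are assumptions.

```python
import contextvars
import io
import logging

# Holds the tenant for the current request; "-" means no tenant context.
current_tenant = contextvars.ContextVar("current_tenant", default="-")


class TenantFilter(logging.Filter):
    """Copy the current tenant onto every record so formatters can print it."""

    def filter(self, record: logging.LogRecord) -> bool:
        record.tenant_id = current_tenant.get()
        return True


def build_logger(stream: io.TextIOBase) -> logging.Logger:
    """Create a logger whose output always carries a tenant=<id> field."""
    logger = logging.getLogger("saas.tenant_demo")
    logger.handlers.clear()
    logger.setLevel(logging.INFO)
    logger.propagate = False
    handler = logging.StreamHandler(stream)
    handler.setFormatter(
        logging.Formatter("%(levelname)s tenant=%(tenant_id)s %(message)s")
    )
    handler.addFilter(TenantFilter())
    logger.addHandler(handler)
    return logger
```

Request middleware would call `current_tenant.set(...)` after authentication and reset it when the request ends, so application code can log normally without passing the tenant around.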

Observability and Monitoring Tools

Beyond caching, robust observability ties together logs, metrics, and traces to create actionable insights. These three elements - logs (timestamped events), metrics (numerical system health data), and traces (end-to-end request flows) - provide a comprehensive view of your system's behavior. Observability goes beyond traditional monitoring by addressing unpredictable issues ("unknown unknowns") in complex microservice architectures, as opposed to just predefined thresholds ("known unknowns").

OpenTelemetry has become the go-to standard for vendor-neutral telemetry collection, separating data generation from analysis tools. Leading platforms include Datadog (4.5/5 rating with 859 reviews), New Relic (4.6/5 with 1,454 reviews), and Grafana Cloud (4.6/5 with 220 reviews). As of 2025, Grafana Cloud serves over 25 million users worldwide. For database performance, tools like Amazon RDS Performance Insights and Enhanced Monitoring can track workload and system-level metrics across tenants.

Set up alerts to notify your team when specific tenants exceed resource limits or encounter issues, rather than just monitoring overall system health. Many observability platforms now incorporate AI and machine learning to automatically detect anomalies, helping reduce alert fatigue for operations teams.

Cost and Resource Optimization

As your platform grows, managing cloud costs becomes increasingly important. To align expenses with usage, implement metering for usage-based pricing models. In Kubernetes environments, use ResourceQuotas and LimitRanges at the namespace level to prevent any single tenant from monopolizing cluster resources. For serverless services like AWS Lambda, apply reserved concurrency limits to ensure premium tenants maintain high performance while capping usage for others.

You might also explore tiered performance models. For example, premium tenants could receive dedicated caching and higher resource allocations, while lower-tier tenants operate under throttling limits. This combination of a "Silo Model" for premium users and a "Pool Model" for smaller tenants strikes a balance between isolation and cost efficiency. By closely monitoring per-tenant resource usage - across compute, storage, and networking - you can correlate these costs with pricing to maintain profitability.
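Correlating usage with pricing can start from a simple aggregation like the one below. The resource names and unit costs in `UNIT_COST_USD` are invented for illustration; real figures would come from your cloud bill and metering pipeline.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Iterable


@dataclass(frozen=True)
class UsageEvent:
    tenant_id: str
    resource: str  # e.g. "compute_seconds", "storage_gb_hours", "egress_gb"
    quantity: float


# Hypothetical unit costs in USD; replace with figures from your own billing data.
UNIT_COST_USD = {
    "compute_seconds": 0.00002,
    "storage_gb_hours": 0.00003,
    "egress_gb": 0.09,
}


def cost_per_tenant(events: Iterable[UsageEvent]) -> dict[str, float]:
    """Sum metered usage into an estimated infrastructure cost per tenant."""
    totals: dict[str, float] = defaultdict(float)
    for event in events:
        totals[event.tenant_id] += event.quantity * UNIT_COST_USD[event.resource]
    return dict(totals)


def gross_margin(revenue_usd: float, cost_usd: float) -> float:
    """Fraction of revenue left after infrastructure cost."""
    if revenue_usd <= 0:
        raise ValueError("revenue_usd must be positive")
    return (revenue_usd - cost_usd) / revenue_usd
```

Tenants whose margin falls below a target are candidates for a higher tier, tighter throttling, or a move to dedicated infrastructure priced accordingly.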

Partnering with Scimus for SaaS Development

Creating a secure, scalable multi-tenant platform requires making smart architectural decisions from the start. Early choices - like selecting the right isolation model or implementing tenant-aware routing - can shape whether your platform runs smoothly or becomes a maintenance headache. As discussed earlier, these technical decisions are crucial, and Scimus brings expertise to help you make them effectively. By focusing on these core elements, Scimus delivers tailored, scalable solutions that meet your operational and compliance demands.

Custom Architecture and Development

Scimus uses proven strategies to design multi-cluster Amazon EKS architectures that meet the unique needs of your SaaS platform. For businesses requiring strict regulatory or security compliance, Scimus implements physical isolation by distributing workloads across dedicated tenant clusters, ensuring sensitive data stays secure. This setup often includes hub-and-spoke networking through AWS PrivateLink, which facilitates private, encrypted communication between tenant-specific VPCs and shared services, keeping both compute and networking resources isolated.

To streamline operations, Scimus employs automated onboarding pipelines using infrastructure-as-code. This approach ensures new tenant environments, including databases and routing configurations, are provisioned consistently and efficiently as your platform grows. Automation minimizes manual errors and helps maintain flexibility, even at scale.

Industry-Specific Scaling Solutions

Scimus goes beyond generic solutions by tailoring strategies to industries with complex regulatory needs, such as healthcare and fintech. Dedicated domain experts ensure compliance by implementing robust isolation measures that go beyond basic authentication and authorization. These measures are designed to prevent critical errors, such as crossing tenant boundaries, which could be catastrophic for a SaaS business.

To tackle multi-tenant challenges, Scimus prioritizes preventing data leakage, managing resource usage, and enforcing strict tenant isolation. For businesses with diverse tenant profiles, Scimus offers hybrid architectures. These allow high-compliance or high-traffic clients to operate on siloed infrastructure, while other tenants share a more cost-effective, pooled model. This flexibility helps you balance security needs with operational costs as your platform expands.

Conclusion

Key Steps Recap

Creating a scalable multi-tenant SaaS platform starts with making informed, strategic decisions early on. One of the most critical steps is implementing robust tenant isolation as a separate layer from authentication to prevent any risk of data crossover between tenants. Choosing the right data partitioning model - whether silo, bridge, or pool - should align with your compliance needs and cost considerations. Automating tenant lifecycle management through a centralized control plane can save you from the headaches of manual deployments and streamline operations.

When it comes to database design, scalability is key. A shared table with a tenant_id column is a practical solution for handling over 100,000 tenants efficiently. Additionally, tenant-aware routing at your platform's entry point ensures smooth operations, while monitoring tools can help mitigate the "noisy neighbor" effect - where one tenant's resource-heavy activity could impact others' performance. These steps underscore the importance of careful planning and technical expertise in building a sustainable SaaS platform.

Why Expertise Matters

The challenges of multi-tenancy require more than just technical know-how - they demand specialized expertise. Having experienced professionals on board can make all the difference, helping you balance the trade-offs between isolation, cost, and operational efficiency. As AWS aptly states:

"Crossing this boundary [tenant isolation] in any form would represent a significant and potentially un-recoverable event for a SaaS business."

Real-world examples highlight the value of expert guidance. For instance, in December 2025, a SaaS productivity company transitioned to a hybrid architecture with the help of seasoned professionals. The results? A 40% reduction in database CPU usage and a dramatic improvement in API response times - from 400 ms to just 120 ms. This kind of expertise not only helps you avoid pitfalls like data leaks or scaling issues but also ensures that your platform is ready to grow alongside your expanding tenant base.

FAQs

What’s the difference between Silo, Bridge, and Pool isolation models in multi-tenant SaaS?

The Silo, Bridge, and Pool models represent different strategies for managing tenant isolation in multi-tenant SaaS platforms. Each approach comes with its own set of benefits and trade-offs when it comes to security, cost, and scalability.

- Silo isolation: This model assigns dedicated resources - like databases or compute instances - to each tenant. It offers strong security and ensures that one tenant's performance won't affect another's. However, this approach can be more expensive and may not scale as easily.

- Pool isolation: Here, infrastructure is shared among all tenants, with a tenant identifier used to keep data separate. It’s a cost-effective solution and scales efficiently, though it demands rigorous safeguards to prevent data breaches or performance disruptions.

- Bridge isolation: This hybrid model blends the two approaches. At the data layer, as described above, it usually means a separate schema per tenant inside a shared database. More broadly, non-critical components, such as web servers, are shared for efficiency, while critical resources like storage are isolated to maintain security and performance. This setup provides a flexible balance between cost savings and resource isolation, tailored to specific requirements.

Each model serves a distinct purpose, and choosing the right one depends on the platform's priorities and tenant needs.

How can I protect data and prevent breaches in a multi-tenant SaaS platform?

To protect data in a multi-tenant SaaS platform, start by enforcing strict tenant isolation. This can be achieved by using separate accounts, virtual private clouds (VPCs), or dedicated containers for each tenant. Strengthen this setup with application-level measures like role-based access control (RBAC) and dynamically generated permissions to ensure every request is securely tied to the appropriate tenant.

At the database level, select an isolation strategy that matches your security requirements. Options include using separate databases, schemas, or sharded tables with tenant-specific keys. To protect data in transit and at rest, rely on TLS/HTTPS and encryption methods that incorporate customer-managed keys. Regularly rotate encryption keys and securely store sensitive information using a dedicated secrets manager.

On top of these measures, prioritize continuous monitoring and anomaly detection. Keep detailed logs of access events, monitor for unusual activity, and set up alerts for anything suspicious. Embrace a least-privilege access model, schedule routine penetration tests, and automate compliance checks to reduce the risk of breaches. By combining these strategies, you can create a solid defense against data leaks and unauthorized access.

How can I prevent one tenant from overusing resources in a multi-tenant SaaS platform?

To tackle the 'noisy neighbor' problem - where one tenant uses up more than their fair share of shared resources - you’ll need a mix of proactive and reactive strategies. Start by setting per-tenant limits for CPU, memory, and storage. In Kubernetes, ResourceQuotas and LimitRanges applied to each tenant's namespace enforce these limits, while RBAC restricts who can change them. For customers with higher priorities or sensitive needs, you might want to set up dedicated resource pools to keep their workloads separate from the shared infrastructure.

On top of that, use dynamic throttling policies to manage tenant requests and keep workloads balanced across the platform. Tools like CloudWatch are invaluable for monitoring usage patterns. They can even trigger auto-scaling or send alerts when a tenant is approaching their limits. These steps help ensure resources are distributed fairly while keeping the platform stable and performing well for everyone.